Getting Started with Deep Learning: ANN

The human brain has an exceptional ability to learn. If we could mimic how the brain works on a machine, it would be a huge advance in technology. Deep learning tries to do just that!

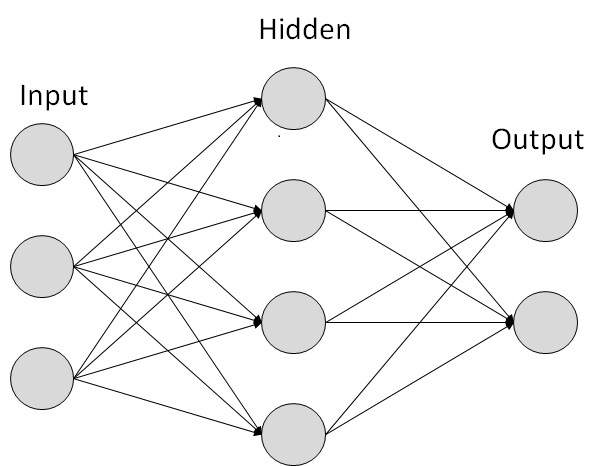

The human brain has billions of neurons. Deep learning borrows this idea to build artificial neurons. Data is fed into an input layer, much like the signals our senses provide. It then passes through one or more layers of neurons, called hidden layers, which finally produce the result in the output layer.

The image above illustrates shallow learning, where the network has a single hidden layer. Deep learning is similar, but the network has several hidden layers.
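To make the shallow/deep distinction concrete, the sketch below describes a fully connected network by its layer sizes and counts the links (weights) between consecutive layers. The layer sizes are hypothetical, not read from the image, and biases are ignored.

```python
def count_weights(layer_sizes):
    """Number of links (weights) in a fully connected network, ignoring biases."""
    # Every neuron in one layer links to every neuron in the next layer
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))

shallow = [3, 4, 1]        # input layer, one hidden layer, output layer
deep = [3, 4, 4, 4, 1]     # input layer, three hidden layers, output layer

print(count_weights(shallow))  # 3*4 + 4*1 = 16
print(count_weights(deep))     # 3*4 + 4*4 + 4*4 + 4*1 = 48
```

Adding hidden layers quickly increases the number of weights the network has to learn.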

Artificial Neural Networks (ANN)

Let's use the figure above to understand a simple artificial neural network. Each neuron in a hidden layer receives several inputs and produces a single output.

Consider a dataset in which each row represents an example and each column represents a feature (attribute) of the examples.

Take a single row (one example) from the dataset. Its features form the input layer, which is connected to the hidden layer. Every link is initially assigned a random weight, and the neural network learns by adjusting these weights.
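A minimal sketch of this setup in plain Python, assuming a small made-up dataset and a hypothetical hidden layer of 4 neurons. Drawing weights uniformly from [-1, 1] is just one common choice of random initialisation, not the only one.

```python
import random

# Hypothetical dataset: each row is an example, each column a feature
dataset = [
    [0.5, 1.2, 3.0],
    [1.5, 0.3, 2.2],
    [0.9, 2.1, 0.4],
]

n_features = len(dataset[0])  # size of the input layer
n_hidden = 4                  # hypothetical number of hidden neurons

random.seed(0)  # fixed seed so the example is reproducible
# weights[j][i] is the random initial weight on the link from input i to hidden neuron j
weights = [[random.uniform(-1.0, 1.0) for _ in range(n_features)]
           for _ in range(n_hidden)]

x = dataset[0]  # a single example forms the input layer
print(x)
print(len(weights), len(weights[0]))  # 4 3
```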

Let $x_{1}, x_{2}, \dots, x_{m}$ be the $m$ features and $w_{1}, w_{2}, \dots, w_{m}$ be the corresponding weights.

Inside the neuron, each input signal is multiplied by its weight and the products are summed: $\sum_{i=1}^{m} w_{i}x_{i}$.
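As a worked example of the weighted sum, here it is for three features with illustrative values (not taken from any real dataset):

```python
def weighted_sum(x, w):
    """Compute sum_{i=1}^{m} w_i * x_i for one neuron."""
    return sum(w_i * x_i for w_i, x_i in zip(w, x))

x = [1.0, 2.0, 3.0]    # features x_1, x_2, x_3 (illustrative values)
w = [0.5, -1.0, 0.25]  # weights w_1, w_2, w_3 (illustrative values)
print(weighted_sum(x, w))  # 0.5*1.0 + (-1.0)*2.0 + 0.25*3.0 = -0.75
```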

Next, an activation function $\phi$ is applied to this weighted sum: $\phi\left(\sum_{i=1}^{m} w_{i}x_{i}\right)$.
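The sketch below combines both steps into one function and takes the activation function $\phi$ as a parameter. The `threshold` helper and the input values are illustrative; `math.tanh` is Python's standard-library tanh.

```python
import math

def neuron(x, w, phi):
    """Output of one neuron: phi(sum_i w_i * x_i)."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x))  # weighted sum
    return phi(z)                                  # activation

def threshold(z):
    # Outputs 1 when the weighted sum is non-negative, otherwise 0
    return 1.0 if z >= 0 else 0.0

x = [1.0, 2.0, 3.0]
w = [0.5, -1.0, 0.25]
print(neuron(x, w, threshold))  # weighted sum is -0.75, so 0.0
print(neuron(x, w, math.tanh))  # tanh(-0.75) ≈ -0.635
```

Swapping `phi` changes how the neuron responds to the same weighted sum.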

There are several activation functions to choose from, such as sigmoid, tanh, threshold (step) and ReLU.
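Minimal plain-Python definitions of the four activation functions named above. The threshold function here outputs 1 for $z \ge 0$, which is one common convention (others use $z > 0$). The sigmoid is written in a form that avoids overflow in `math.exp` for large negative inputs.

```python
import math

def sigmoid(z):
    # Squashes any real number into (0, 1); the branch keeps math.exp from overflowing
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    # Squashes any real number into (-1, 1), centred on 0
    return math.tanh(z)

def threshold(z):
    # Step function: 1 if z >= 0, else 0
    return 1.0 if z >= 0 else 0.0

def relu(z):
    # Passes positive values through unchanged and clips negatives to 0
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), threshold(z), relu(z))
```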

Suppose we need to predict whether something is possible or not, so the output is either 1 or 0 (binary classification). For this example, we will use the ReLU activation function for the neurons in the hidden layers and the sigmoid function in the output layer.
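A sketch of one forward pass through a network with a ReLU hidden layer and a single sigmoid output neuron. The weights are illustrative, biases are omitted to match the formulas above, and the 0.5 cutoff that turns the sigmoid output into a 1/0 prediction is a common choice rather than something fixed by the text.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def forward(x, hidden_weights, output_weights):
    """One forward pass: ReLU hidden layer, sigmoid output neuron."""
    # Each hidden neuron j computes relu(sum_i w_ji * x_i)
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in hidden_weights]
    # The output neuron combines the hidden outputs and applies the sigmoid
    z = sum(w * h for w, h in zip(output_weights, hidden))
    return sigmoid(z)  # a value in (0, 1)

x = [1.0, 2.0]
hidden_weights = [[0.5, 0.5], [-1.0, 0.25]]  # 2 hidden neurons (illustrative)
output_weights = [1.0, -1.0]                 # illustrative

y_hat = forward(x, hidden_weights, output_weights)
prediction = 1 if y_hat >= 0.5 else 0
print(y_hat, prediction)  # ≈ 0.818, 1
```

Here the second hidden neuron's weighted sum is negative, so ReLU outputs 0 and only the first neuron contributes to the output.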

How do neural networks learn?

Initially, the neural network calculates the output value $\hat{y}$ for every example using the randomly assigned weights. Using the actual output $y$ and the predicted output $\hat{y}$ of all $n$ examples, it then calculates a cost function that measures the error: $C = \sum_{j=1}^{n}\frac{1}{2}(\hat{y}_{j} - y_{j})^{2}$.
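The cost function translates directly into code. The values of $y$ and $\hat{y}$ below are made up for illustration:

```python
def cost(y_hat, y):
    """C = sum over examples j of 1/2 * (y_hat_j - y_j)^2."""
    return sum(0.5 * (p - t) ** 2 for p, t in zip(y_hat, y))

y = [1, 0, 1]              # actual outputs (illustrative)
y_hat = [0.75, 0.5, 0.5]   # predicted outputs (illustrative)
print(cost(y_hat, y))      # 0.5 * (0.0625 + 0.25 + 0.25) = 0.28125
```

A perfect prediction for every example gives a cost of 0; the worse the predictions, the larger the cost.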

The goal is to minimize the cost function. The error is fed back through the network to work out how much each weight contributed to it (this backward pass is called backpropagation), and the weights are then updated using gradient descent. The whole process is repeated until the cost stops decreasing, that is, until it reaches a (possibly local) minimum.
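A minimal sketch of this loop, simplified to a single sigmoid neuron so that the whole backward pass is one application of the chain rule; with hidden layers, backpropagation repeats the same step layer by layer. Assumptions: the AND dataset, the starting weights, the learning rate `lr = 0.5` and the 1000 epochs are illustrative choices, and a constant feature of 1 is appended to each example so the neuron can learn an offset (acting as a bias term, which the text above does not mention).

```python
import math

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(x, w):
    # Forward pass of a single sigmoid neuron
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))

def cost(X, Y, w):
    # C = sum_j 1/2 * (y_hat_j - y_j)^2 over all examples
    return sum(0.5 * (predict(x, w) - y) ** 2 for x, y in zip(X, Y))

def gradient(X, Y, w):
    # Chain rule: dC/dw_i = sum_j (y_hat_j - y_j) * y_hat_j * (1 - y_hat_j) * x_ji
    g = [0.0] * len(w)
    for x, y in zip(X, Y):
        y_hat = predict(x, w)
        delta = (y_hat - y) * y_hat * (1.0 - y_hat)
        for i, xi in enumerate(x):
            g[i] += delta * xi
    return g

def train(X, Y, w, lr=0.5, epochs=1000):
    history = [cost(X, Y, w)]
    for _ in range(epochs):
        g = gradient(X, Y, w)
        # Gradient descent: move each weight a small step against its gradient
        w = [wi - lr * gi for wi, gi in zip(w, g)]
        history.append(cost(X, Y, w))
    return w, history

# Hypothetical data: logical AND, with a constant third feature of 1
X = [[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]]
Y = [0, 0, 0, 1]
w0 = [0.1, -0.2, 0.05]  # small starting weights standing in for random ones

w, history = train(X, Y, w0)
print(history[0], history[-1])             # cost before and after training
print([round(predict(x, w), 2) for x in X])
```

Each entry of `history` is the cost after one gradient-descent step, so it should shrink as training proceeds.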

Let's try to build an ANN using Python!
